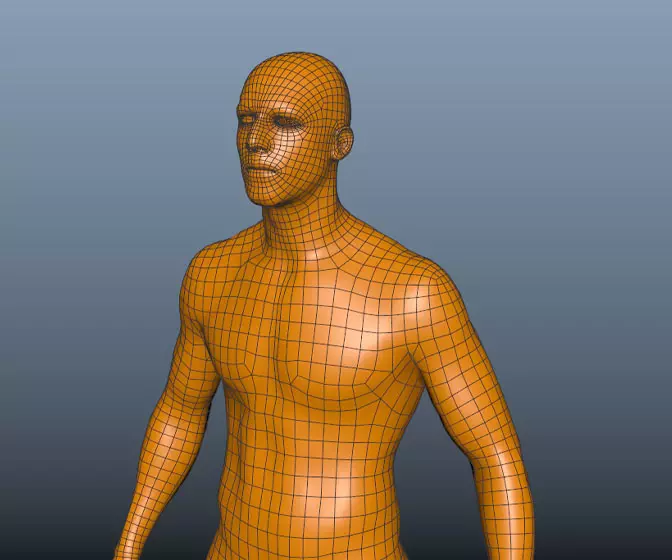

Introduction to Maya - Modeling Fundamentals Vol 1

This course will look at the fundamentals of modeling in Maya with an emphasis on creating good topology. We'll look at what makes a good model in Maya and why objects are modeled in the way they are.

#1

20-05-2015, 07:29 PM

EduSciVis-er

Join Date: Dec 2005

Location: Toronto

Posts: 3,374

Backburner Concurrent Jobs

One thing I haven't been able to determine yet is how to get Backburner to distribute tasks from multiple jobs concurrently. There is a manager setting for "Max concurrent jobs", so it seems to me that given three jobs submitted at about the same time with the same priority, it should send out some tasks from the first, then some from the second, then some from the third, and carry on like that. Currently it seems (albeit with a small sample size) that it completes one job entirely before continuing to the next.

PS I know Genny has done some work extending backburner; I may try to install the script/mod on the render machines, as it seems like it could be very useful.

#2

21-05-2015, 03:36 PM

I think you also misread the name of that setting. It's "Max Concurrent Assignments", not jobs. This is the number of assignments the Backburner manager can output at once, not the number of assignments it will give for each machine.

Imagination is more important than knowledge.

Last edited by NextDesign; 21-05-2015 at 03:51 PM.

#3

21-05-2015, 03:41 PM

EduSciVis-er

#4

21-05-2015, 03:49 PM

Last edited by NextDesign; 21-05-2015 at 03:52 PM.

#5

21-05-2015, 03:55 PM

EduSciVis-er

Job 1: 01, 02, 03, 04

Job 2: 01, 02, 03, 04

Job 3: 01, 02, 03, 04

Renderer A takes Job1_task01

Renderer B takes Job1_task02

They both finish, and are available to receive another task each. We now have:

Job 1: 03, 04

Job 2: 01, 02, 03, 04

Job 3: 01, 02, 03, 04

Currently, the manager will send out the remaining two tasks from Job 1. I would rather it send out the first task each from Job 2 and Job 3. So the jobs finish at about the same time, rather than Job 1 finishing before Jobs 2 and 3 even begin.
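To make the difference concrete, here is a minimal sketch of the two dispatch orders, starting from the three full jobs above. It is only a toy model of the queue, not Backburner's actual scheduler; `dispatch_order` and the policy names are made up for illustration.

```python
from collections import deque

def dispatch_order(jobs, policy):
    """Return the order in which tasks would be handed to free renderers.

    jobs:   dict of job name -> list of task ids, in queue order.
    policy: "sequential" drains one job before touching the next
            (what the manager appears to do now);
            "round_robin" takes one task from each job in turn
            (the interleaving described above).
    """
    queues = deque((name, deque(tasks)) for name, tasks in jobs.items())
    order = []
    while queues:
        name, tasks = queues[0]
        order.append(f"{name}_task{tasks.popleft()}")
        if not tasks:
            queues.popleft()       # job is done, drop it from the queue
        elif policy == "round_robin":
            queues.rotate(-1)      # send this job to the back of the line
    return order

jobs = {
    "Job1": ["01", "02", "03", "04"],
    "Job2": ["01", "02", "03", "04"],
    "Job3": ["01", "02", "03", "04"],
}
sequential = dispatch_order(jobs, "sequential")
round_robin = dispatch_order(jobs, "round_robin")
print("sequential: ", sequential)
print("round robin:", round_robin)
```

With the sequential policy all four Job1 tasks go out before Job2 starts; with round robin the first three tasks handed out are one from each job, so the jobs progress at roughly the same rate.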

#6

21-05-2015, 04:08 PM

1) Submit each frame as a separate paused job.

2) Assign each job a decreasing priority number.

3) Un-pause the jobs.

So you would have priorities assigned in the following way:

Code:

Job1_task01: 100

Job1_task02: 75

Job1_task03: 50

Job1_task04: 25

Job2_task01: 100

Job2_task02: 75

Job2_task03: 50

Job2_task04: 25

...
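For longer jobs the same table could be generated rather than typed out. This is a rough sketch that only computes the numbers from the listing above; `staggered_priorities` is a hypothetical helper, it does not talk to Backburner, and which end of the priority scale Backburner treats as most urgent should be checked against its documentation before using the values.

```python
def staggered_priorities(job_names, frames_per_job, top=100):
    """Build the per-frame priority table from the post.

    Every job gets the same descending sequence, so frame N of each job
    shares a priority with frame N of every other job.
    """
    step = top // frames_per_job
    table = {}
    for job in job_names:
        for i in range(frames_per_job):
            table[f"{job}_task{i + 1:02d}"] = top - i * step
    return table

priorities = staggered_priorities(["Job1", "Job2", "Job3"], frames_per_job=4)
for name, value in priorities.items():
    print(f"{name}: {value}")
```

Because equal frame numbers share a value, sorting the per-frame jobs by this number groups frame 01 of every job together, then frame 02, and so on.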

#7

21-05-2015, 04:14 PM

EduSciVis-er

This brief section from the sparse documentation also seems to indicate that this happens:

Suspending and reactivating jobs is commonly used to quickly improve job throughput and network efficiency. For example, you might suspend one job to temporarily assign its render nodes to another that is more urgent. Or, if a particular job is taking too long, you can suspend it until off-peak hours, allowing shorter jobs to complete in the meantime. Sometimes, a low-priority job can 'grab' a processing node during the brief moment when it is between tasks—in such a case, suspending the low-priority job will return system resources to jobs with higher priorities.

I just don't understand the logic behind the task distribution.

#8

21-05-2015, 04:24 PM

Last edited by NextDesign; 21-05-2015 at 04:27 PM.

#9

21-05-2015, 06:31 PM

#10

21-05-2015, 06:40 PM

EduSciVis-er

It seems like it requires case-by-case monitoring and management: reducing the "max servers per job" on a per-job basis when the queue is long, for example.
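As a rough rule of thumb for picking that number, one option is to split the render machines evenly across whatever is queued. The sketch below is only that arithmetic; `max_servers_per_job` here is a hypothetical helper, not a Backburner call, and the result would still have to be entered by hand for each job.

```python
def max_servers_per_job(total_servers, queued_jobs):
    """Suggest a per-job server cap so every queued job gets a share.

    Falls back to all servers when nothing else is queued, and never
    suggests fewer than one server.
    """
    if queued_jobs <= 0:
        return total_servers
    return max(1, total_servers // queued_jobs)

# Example: 6 render machines and 3 jobs waiting -> cap each job at 2.
print(max_servers_per_job(6, 3))
```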
