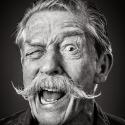

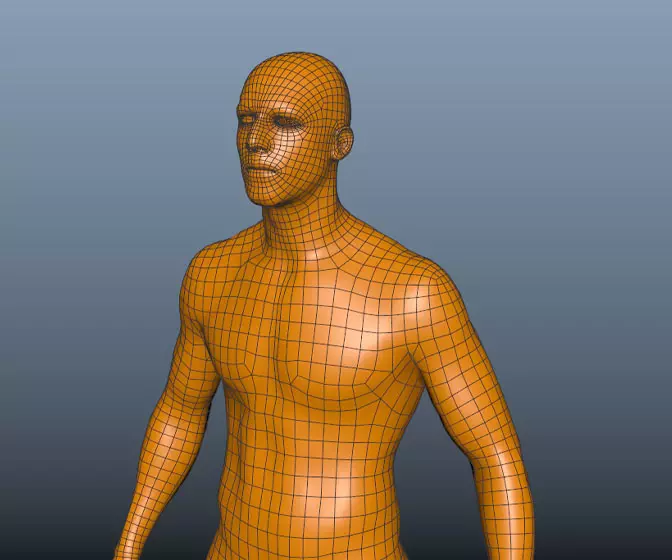

Digital humans: the art of the digital double

Ever wanted to know how digital doubles are created in the movie industry? This course will give you an insight into how it's done.

#31 08-12-2012, 06:29 PM

Avatar Challenge Winner 2010

#32 08-12-2012, 06:35 PM

J

#33 09-12-2012, 04:07 AM

Registered User

Join Date: Aug 2011

Location: Sliema Malta

Posts: 497

On the 3d scans I wonder why such a big film is using that process. At ICT we took all the photos on one timed trigger. I realize that you would get deviation from the smaller process, but on a massive budget I would have thought you'd have this more efficient option.

I would really like to see a vid of the scan data process in 3D-Coat. You mentioned it and I looked for the vid but only found a broken link. Maybe you could speed-record something like I did previously, so I could get a better idea of the workflow you mentioned.

thanks

Collin (The ambitious youngin)

Last edited by Chavfister; 09-12-2012 at 04:20 AM.

#34 09-12-2012, 06:25 AM

I've built many 3d scanners in the past, and am in the process of writing yet another. (See https://simplymaya.com/forum/showthread.php?t=36965 for the latest) No acquisition technique is 100% effective. It's a constant trade-off between resolution, speed, portability, and robustness to certain BRDFs (material properties).

For example, some techniques are fast, but low resolution. Others are slow, but produce a huge amount of resolution. Some, like lasers, are dangerous to humans; while others are simply too large/heavy to bring to set. And let's not forget that different scanners exploit different optical properties. For example, some are more robust to specular objects, while others are more robust to projector defocus, etc.

Plus, no matter what scanning technique you use, there is no guarantee that it will be "correct". It takes a human to make it right. I'm all for technology and automation; hell, I'm a TD; but at the end of the day, software is stupid. Humans need to make sure the job's done right.

Imagination is more important than knowledge.

Last edited by NextDesign; 09-12-2012 at 06:29 AM.

#35 09-12-2012, 06:47 AM

Registered User

Join Date: Aug 2011

Location: Sliema Malta

Posts: 497

I have made my own game engine and know a fair amount about shaders and how you mathematically calculate and derive vectors, matrices, normals, spec, raycasts, etc. But I was curious about the reverse process: how an image is taken and the geometry is calculated back from it. You are not taking a known eyeLoc, raycasting into 3d space, and creating the 2d image-plane pixel, but the process must be similar to create the point cloud. Does it still have many issues with BRDFs that aren't Lambertian?

Are you setting up a grid and calibrating the camera off reference points? With my motion capture system I would have a grid comprised of 12 cameras and would "wand" the area to calibrate, and then be able to triangulate the reflectors on my suits. Just interesting, since it is kind of the opposite side of the programming spectrum from what I am doing.
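For readers who have not seen the triangulation step mentioned above, here is a minimal sketch of one common approach: each calibrated camera turns a detected marker into a ray, and the 3D position is estimated as the midpoint of the shortest segment between two such rays. This is only an illustration under stated assumptions, not the method used by any system discussed in this thread; the function name `triangulate_midpoint`, the helper functions, and the example camera positions are all hypothetical.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def scale(a: Vec3, s: float) -> Vec3:
    return (a[0] * s, a[1] * s, a[2] * s)


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def triangulate_midpoint(o1: Vec3, d1: Vec3, o2: Vec3, d2: Vec3) -> Vec3:
    """Estimate a 3D point from two rays o + t * d (hypothetical helper).

    Finds the parameters t1, t2 that minimise the distance between the
    two rays, then returns the midpoint of that shortest segment.
    """
    w = sub(o1, o2)
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w), dot(d2, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are (nearly) parallel; cannot triangulate")
    t1 = (b * e - c * d) / denom
    t2 = (a * e - b * d) / denom
    p1 = add(o1, scale(d1, t1))  # closest point on ray 1
    p2 = add(o2, scale(d2, t2))  # closest point on ray 2
    return scale(add(p1, p2), 0.5)


if __name__ == "__main__":
    # Two assumed cameras one unit either side of the origin,
    # both seeing a marker that sits at (0, 0, 5).
    point = triangulate_midpoint(
        (-1.0, 0.0, 0.0), (1.0, 0.0, 5.0),
        (1.0, 0.0, 0.0), (-1.0, 0.0, 5.0),
    )
    print(point)
```

With more than two cameras, a real system would typically solve a least-squares problem over all rays rather than pairing them up, and the quality of the result depends heavily on how well the cameras were calibrated in the first place.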

Last edited by Chavfister; 09-12-2012 at 06:58 AM.

#36 09-12-2012, 02:20 PM

> On the 3d scans I wonder why such a big film is using that process.

Put simply, because there's a budget for it and it makes the process of creating digi doubles a bit easier. Not 'really' easy, but a likeness is gained a lot quicker.

No scan is perfect at any cost. There's always something wrong.

Well, on a film, any film, you will want it as near as damn it, otherwise it will keep coming back for correcting, or we'd all be sticking in generic characters and saying, 'yeah, that's supposed to be Vin Diesel, we just removed the hair....' Also, the point of the scan data is to give you the best possible starting result; the photo ref taken is then used for textures and other additions to the process. The rest is down to the skills of the artist. How real you wish to make it is up to you.

As John has stated, time is a key factor in all of this. Sometimes the actor is only available for about 5 minutes, so you may not get a scan at all and only be supplied with 4 or 5 images of that one person. I had exactly that with Abigail Breslin for Ender's Game, and all the images were out of focus too, so I had to dig up pics of her from Google at about the same age to complete the job. Most studios will have a generic head to work from, so building from scratch every time isn't required, though topology changes will be.

No, the work isn't hard to do, it's just very time consuming and labour intensive. People need to be aware of this. That's why I always say learn the fundamentals and do it from the ground up; then, when a quick one-button solution is presented, you'll know what to do when it needs fixing.

Anyway, another 10 pence of industry experience for you.

Jay

#37 09-12-2012, 03:45 PM

> Did you write the 3d scanner from scratch? A c++ app? Just curious. With the interface did you utilize a GUI library or run it solely as a console app?

A few are written in Java, but most are in C. Each one is a console application.

> Does it still have many issues with BRDF's that aren't lambertian?

Some of them are robust to them, while others make the assumption that the objects are strictly Lambertian.

> Are you setting up a grid and calibrating the camera off reference points?

Some are auto-calibrating, some need no calibration, while others require extensive calibration. A normal structured light application usually needs both intrinsic and extrinsic calibration, as well as gamma calibration. A coaxial setup is an example of a setup that needs no calibration.
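To make those three kinds of calibration a little more concrete, here is a rough sketch of what each set of parameters does in a simple pinhole model. Extrinsics (a rotation and translation) move a world point into the camera's frame, intrinsics (focal lengths and principal point) map it to a pixel, and a gamma value describes a non-linear intensity response that has to be undone before pattern intensities can be trusted. This is an illustration only, not the poster's code: the function names, the lens-distortion-free pinhole model, and the pure power-law gamma are all simplifying assumptions.

```python
from typing import Sequence, Tuple


def project_point(
    point_world: Sequence[float],
    rotation: Sequence[Sequence[float]],  # 3x3, row-major (extrinsic)
    translation: Sequence[float],         # 3-vector (extrinsic)
    fx: float, fy: float,                 # focal lengths in pixels (intrinsic)
    cx: float, cy: float,                 # principal point in pixels (intrinsic)
) -> Tuple[float, float]:
    """Project a world-space point to pixel coordinates (hypothetical helper)."""
    # Extrinsics: world frame -> camera frame.
    xc, yc, zc = (
        sum(rotation[r][i] * point_world[i] for i in range(3)) + translation[r]
        for r in range(3)
    )
    if zc <= 0.0:
        raise ValueError("point is behind the camera")
    # Intrinsics: perspective divide, then scale and shift into pixels.
    return (fx * xc / zc + cx, fy * yc / zc + cy)


def linearize(value: float, gamma: float = 2.2) -> float:
    """Undo an assumed power-law response for a value in [0, 1]."""
    if not 0.0 <= value <= 1.0:
        raise ValueError("value must be in [0, 1]")
    return value ** gamma


if __name__ == "__main__":
    identity = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
    # Made-up camera: 800 px focal length, 640x480 image centre.
    pixel = project_point((0.1, -0.2, 2.0), identity, (0.0, 0.0, 0.0),
                          800.0, 800.0, 320.0, 240.0)
    print(pixel)
    print(linearize(0.5, gamma=2.0))
```

Calibration is the inverse problem: you observe known reference points or patterns and solve for the rotation, translation, focal lengths, principal point, and gamma that best explain what the camera (and projector) actually recorded.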

Imagination is more important than knowledge.
