Join Date: Mar 2006

,Pat

I haven't heard of anything like that, but who knows, it could be true.

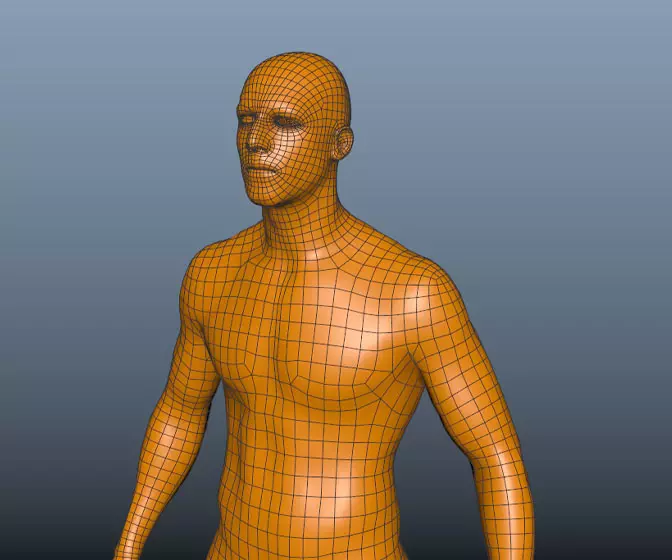

I guess it's just how well you set up your mesh.

Join Date: Aug 2004

Originally posted by concorddawned:

For times like these and the technology we have at hand, this really shouldn't be the case. I wish that hardware companies would put their ass into gear and make hardware that can smoothly display high-polycount characters.

Why do we have to suffer with low quality images...

Back in the GeForce 3 era we had "nonlinear polygon edges" from nVidia -- which joined "3DNow(here)!" in the pit of forgotten code because it was too damn hard to code.

Though -- allegedly PixelShaders4 (or 5) are resurrecting them... right when per-pixel lighting makes it unnecessary...

Perfect timing MS!

Join Date: Mar 2006

,Pat

Sounds good... and sorry to bother everyone, but I've got one more question that I asked in another area. I have heard that render time for NURBS and Sub Div's in animations is crazy long (especially running at 30 frames per second). Even with render layers, how long would you estimate the render time to be for, let's say, a 30 second long animation (about the length of a cut scene)?

My CPU is not the best, and a 3 second fire animation that I did from this site took me 12 hrs and it still didn't finish all the way. I am also using PLE.
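For a rough feel of the numbers, here is a back-of-the-envelope sketch. It assumes the fire animation was also rendered at 30 frames per second and that every frame costs about the same, neither of which is stated above, so treat the result as a loose lower bound for that particular machine and scene, not a general estimate. The function names are made up for illustration.

```python
def frame_count(seconds, fps):
    """Number of frames in a clip of the given length."""
    return round(seconds * fps)


def total_render_hours(seconds, fps, minutes_per_frame):
    """Total render time in hours, assuming a constant cost per frame."""
    return frame_count(seconds, fps) * minutes_per_frame / 60.0


# 3 s at an ASSUMED 30 fps is 90 frames; 12 hours spread over 90 frames
# is at least 8 minutes per frame (the render had not even finished).
fire_frames = frame_count(3, 30)
minutes_per_frame = 12 * 60 / fire_frames

# Scaling the same per-frame cost up to a 30 second cut scene.
cut_scene_hours = total_render_hours(30, 30, minutes_per_frame)
print(fire_frames, minutes_per_frame, cut_scene_hours)
```

The point is only that render time scales with the frame count: ten times the length means ten times the wait unless the per-frame cost comes down.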

Originally posted by phornby:

For gaming art, since I can work in NURBS and then convert to polygons at the end, will I lose any details if I fix it up good in Sub Div's and then convert to polygons? May be a stupid question.

,Pat

While it is true that you can do whatever you like as long as the end result is polygons, I can't help but recommend just trying to make it straight from polys to begin with. The reason I say that is because in the game industry you are always given a polygon limit, and if you are converting from NURBS or subDs or whatever, the resulting polygon count is pseudo random, and I'd say definitely not the most efficient. That would then result in a lot of time spent cleaning up the geometry.

So, personally, I recommend starting and ending with polygons for serious game art.

Join Date: Oct 2005

Cause I first used Blender, so I'm used to polygons.

By the way, nice pics on your website, mckinkley.

Last edited by Gamestarf; 09-04-2006 at 08:35 PM.

Not that my raytracer is great (I haven't posted this in the WIP section because I think it's insignificant), but I did write scripts for triangle raytracing, and raytracing is mostly per-pixel.

However, using a rather complex algorithm that I found on the web and adapted, I wrote triangle Gouraud shading for my raytracer. The results were dramatically faster.

I didn't save any pics, but a triangle mesh with 150 triangles and standard raytracing (per-pixel shading) rendered just as fast as 7000 triangles Gouraud shaded. Wow.
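For anyone wondering why Gouraud shading is cheaper, here is a minimal sketch of the general idea. It is not the adapted algorithm mentioned above, which isn't shown in this thread. It assumes a single directional light, unit-length normals, and simple Lambert diffuse lighting; the function names are made up for illustration. Lighting is evaluated once per vertex, and each pixel then only blends the three vertex values with its barycentric weights.

```python
def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def lambert(normal, light_dir):
    """Diffuse intensity for unit-length normal and light direction."""
    return max(0.0, dot(normal, light_dir))


def vertex_intensities(normals, light_dir):
    """Evaluate the lighting once per vertex (the expensive part)."""
    return tuple(lambert(n, light_dir) for n in normals)


def gouraud(intensities, u, v):
    """Blend the three vertex intensities with barycentric weights.

    u and v are the weights of vertices 1 and 2; vertex 0 gets 1 - u - v.
    """
    i0, i1, i2 = intensities
    return (1.0 - u - v) * i0 + u * i1 + v * i2


# Light shining straight down the +z axis onto three differently tilted normals.
light = (0.0, 0.0, 1.0)
normals = ((0.0, 0.0, 1.0), (1.0, 0.0, 0.0), (0.0, 0.6, 0.8))
per_vertex = vertex_intensities(normals, light)
print(gouraud(per_vertex, 0.25, 0.25))
```

Per-pixel shading would instead interpolate the normal and run the lighting calculation again for every pixel, which is where the extra time goes.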

Well, to really test speeds, I did this: a 30,000 triangle mesh (a really hi-poly sphere), normal raytraced = 6.5 minutes.

And my triangle intersection method is VERY fast, as it uses barycentric coordinates and no sqrt functions (which take about 300x longer than a + or -; normal triangle intersection tests use 3 of those). Mine simply uses a bunch of plus or minus.
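As an illustration of a square-root-free test built on barycentric coordinates, here is a sketch of the well-known Möller-Trumbore ray/triangle intersection. This is a stand-in, not the poster's code, which isn't shown in this thread; unlike the description above it also uses multiplications and one division, but no sqrt. The helper names and the `EPS` tolerance are assumptions made for the example.

```python
EPS = 1e-9  # assumed tolerance for parallel rays and self-hits


def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def ray_triangle(origin, direction, v0, v1, v2):
    """Return (t, u, v) for a hit, or None for a miss.

    t is the distance along the ray (in units of `direction`);
    u and v are the barycentric weights of v1 and v2.
    """
    e1 = sub(v1, v0)
    e2 = sub(v2, v0)
    p = cross(direction, e2)
    det = dot(e1, p)
    if abs(det) < EPS:          # ray is parallel to the triangle plane
        return None
    inv_det = 1.0 / det
    t_vec = sub(origin, v0)
    u = dot(t_vec, p) * inv_det
    if u < 0.0 or u > 1.0:
        return None
    q = cross(t_vec, e1)
    v = dot(direction, q) * inv_det
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(e2, q) * inv_det
    if t <= EPS:                # triangle is behind the ray origin
        return None
    return t, u, v


tri = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
hit = ray_triangle((0.25, 0.25, 1.0), (0.0, 0.0, -1.0), *tri)
miss = ray_triangle((2.0, 2.0, 1.0), (0.0, 0.0, -1.0), *tri)
print(hit, miss)
```

The `u` and `v` that come back are the same barycentric weights you would use to interpolate vertex colors or normals across the triangle.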

Now compare that to Gouraud shaded, which tests each triangle only once by using intersection caching (really simple, really). That's 30,000 triangles = 19 seconds.

And that's with no kind of spatial subdivision scheme, which generally improves rendering by thousands of times.

NOTE: I didn't post these in my WIP thread because I copied most of the code. The ones I did post were entirely written by me.

So now, throw in an octree structure, which takes about 1.5 seconds to calculate (for the 30,000 tris):

30,000 tris per-pixel shading = 48 seconds (49.5 total)

30,000 tris Gouraud shading = 2.8 seconds (4.3 total)
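To show why an octree helps so much, here is a minimal sketch of one way to build it. It is not the structure used for the timings above, which isn't shown in this thread. All names and the `max_items` / `max_depth` limits are assumptions for the example. Triangles are sorted into nested boxes by their bounding boxes, and a ray only collects the triangles in the boxes it actually passes through.

```python
class Node:
    def __init__(self, lo, hi):
        self.lo, self.hi = lo, hi
        self.items = []      # leaf payload: (index, box_lo, box_hi)
        self.children = []


def tri_bounds(tri):
    lo = tuple(min(p[i] for p in tri) for i in range(3))
    hi = tuple(max(p[i] for p in tri) for i in range(3))
    return lo, hi


def boxes_overlap(alo, ahi, blo, bhi):
    return all(alo[i] <= bhi[i] and blo[i] <= ahi[i] for i in range(3))


def build(node, items, depth=0, max_items=8, max_depth=8):
    """Split a box into 8 octants until few enough triangles remain."""
    if len(items) <= max_items or depth >= max_depth:
        node.items = items
        return node
    mid = tuple((node.lo[i] + node.hi[i]) / 2 for i in range(3))
    for octant in range(8):
        lo = tuple(mid[i] if (octant >> i) & 1 else node.lo[i] for i in range(3))
        hi = tuple(node.hi[i] if (octant >> i) & 1 else mid[i] for i in range(3))
        inside = [it for it in items if boxes_overlap(it[1], it[2], lo, hi)]
        if inside:
            child = build(Node(lo, hi), inside, depth + 1, max_items, max_depth)
            node.children.append(child)
    return node


def ray_hits_box(origin, direction, lo, hi):
    """Slab test: does the ray (t >= 0) pass through the box?"""
    t_near, t_far = 0.0, float("inf")
    for i in range(3):
        if direction[i] == 0.0:
            if origin[i] < lo[i] or origin[i] > hi[i]:
                return False
            continue
        t0 = (lo[i] - origin[i]) / direction[i]
        t1 = (hi[i] - origin[i]) / direction[i]
        if t0 > t1:
            t0, t1 = t1, t0
        t_near, t_far = max(t_near, t0), min(t_far, t1)
        if t_near > t_far:
            return False
    return True


def candidates(node, origin, direction, found=None):
    """Indices of triangles in every leaf box the ray passes through."""
    if found is None:
        found = set()
    if ray_hits_box(origin, direction, node.lo, node.hi):
        found.update(index for index, _, _ in node.items)
        for child in node.children:
            candidates(child, origin, direction, found)
    return found


# 100 small triangles laid out on a 10 x 10 grid in the z = 0 plane.
tris = [((x, y, 0.0), (x + 0.5, y, 0.0), (x, y + 0.5, 0.0))
        for x in range(10) for y in range(10)]
items = [(i,) + tri_bounds(t) for i, t in enumerate(tris)]
root = build(Node((0.0, 0.0, -1.0), (10.0, 10.0, 1.0)), items)
found = candidates(root, (2.2, 3.2, 5.0), (0.0, 0.0, -1.0))
print(len(found), "of", len(tris), "triangles need an exact test")
```

Only the triangles in `found` need the exact ray/triangle test, so most of the mesh is skipped for any given ray. The one-time build cost is the extra 1.5 seconds or so that gets added to the totals.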

So that's my opinion about per-pixel shading. But I don't know how it works in games; that's just how my raytracer does it.

Live the life you love, love the life you live