The actual teapot was a plain white object made by German company Melitta in 1974, which Newell and his wife had bought at a department store in Salt Lake City. These days it can be found at the Computer History Museum in Mountain View, California, together with the original drawings created by Newell as he modeled the teapot by eye before digitizing it. He also created a cup with saucer and a spoon model to go with it.

Fame aside, the teapot is a good object for test rendering and benchmarking because its shape, with a spout and handle, allows for self-shadowing, and its curved surfaces are good for reflection tests. This is why it can be found in 3DS Max to this day.

The teapot has received tribute in many places. In the original “Toy Story” it appears as Buzz is having tea with the headless dolls. Pixar also has a tradition of giving away a novelty walking-teapot toy at the annual SIGGRAPH as a promotion for RenderMan. Newell himself is quoted as saying in the late 1980s that, after all he’d done for computer graphics, the only thing he’d be remembered for would be “That Damned Teapot”.

Many people have opened 3DS Max over the years and wondered why, alongside common primitives like the cube, sphere and cone that serve as starting shapes for modeling, there is a teapot.

The “Utah Teapot” is an icon of computer graphics. It was one of the first 3D models built from mathematical curves rather than polygons, and in early computer graphics labs it served as a test model for many algorithms. It was developed by Martin Newell in 1975 while he was working on his PhD thesis at the University of Utah. At that time there were no 3D modeling programs, so everything was either digitized by hand or sketched on graph paper, with the numbers typed in using a text editor. Because building a model took so much effort, the teapot quickly became a popular and commonly used model among other scientists in the field.

The University of Utah was an important place for the development of computer graphics in the 1970s. It is also where the first ever car scan took place, just three years before the teapot was made. Something we take for granted with modern technology was a monumental task in 1972, when 3D modeling programs were only just being invented. The scan required two things: an object, which was a Volkswagen Beetle, and a scanner, which was a group of students with yardsticks to take the measurements.

The Volkswagen Beetle was a 1967 model and belonged to the wife of computer graphics pioneer Ivan Sutherland, who led the team of students during this era. They were developing some of the first 3D display algorithms and polygonal mapping techniques for approximating complex surfaces.

The polygons were mapped out on the surface of the actual Beetle, every line was measured, and the data was entered into the computer programs that produced the world’s first 3D wireframe model of a car, which was also the first 3D model ever created of any physical object. The model had no wheels or bumpers and contained only the outer surface. It became incredibly valuable to the team as test data and for trying out early rendering techniques, and the original data can still be found on the internet today. Bui Tuong Phong, who was developing the Phong shading and reflection model at the time, used a render of this Beetle to create the first computer-generated image that looked like the physical model.

Sutherland’s group of students on the Beetle project included several people who would later become very important in the development of computer graphics. Among them were future Adobe co-founder John Warnock and the famous computer scientist Alan Kay, who coined the phrase: “The best way to predict the future is to invent it”.

Another student at the time was Pixar co-founder Ed Catmull, who later became president of Walt Disney and Pixar Animation Studios. He made his first important contribution to computer graphics in the same year the Volkswagen Beetle model was made, with the film “A Computer Animated Hand”.

The hand model, for which he made a cast of his own hand, was mapped into polygons using the same technique that was used to construct the car model. After graduating in 1974, Catmull went on to work at the computer graphics lab at New York Institute of Technology and later led the computer graphics division of Lucasfilm, which was bought by Steve Jobs in 1986 to form Pixar.

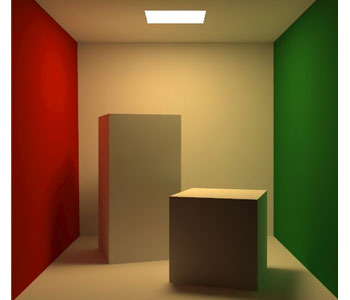

Another common model is the Cornell box, which is widely used to test global illumination. As the name indicates, it was first created by a team of scientists at the graphics department of Cornell University in 1984, when they were testing new radiosity algorithms for their paper “Modeling the Interaction of Light Between Diffuse Surfaces”, which was presented at SIGGRAPH that year.

The Cornell box was constructed as a physical model at the university, with a red left wall, a green right wall, and a white back wall, floor and ceiling. The only light source is at the center of the ceiling. Various objects can then be placed inside it; originally it contained two white boxes. This design shows diffuse reflection with color bleed, where light reflects off the red and green walls, bounces onto the white surfaces and gives them a tint of color. Photographs of the physical box can then be compared with renders of a computerized version of the model to determine the accuracy of the rendering software.
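The color bleed described above can be illustrated with a few lines of arithmetic. This is only a minimal sketch: the albedo values and the `bounce_fraction` parameter are made-up assumptions for illustration, not measurements from the real Cornell box, and it ignores geometry entirely. It simply shows that light which has first bounced off a red wall carries mostly red, so adding it to the direct light tints a white surface.

```python
def reflect(light, albedo):
    """Diffuse reflection: scale each RGB channel of the light by the surface albedo."""
    return tuple(l * a for l, a in zip(light, albedo))


def floor_color(light, wall_albedo, floor_albedo, bounce_fraction):
    """Color leaving the floor: direct light plus one bounce via a colored wall.

    bounce_fraction is a hypothetical stand-in for how much wall-reflected
    light actually reaches the floor (a real renderer derives this from geometry).
    """
    direct = reflect(light, floor_albedo)
    off_wall = reflect(light, wall_albedo)  # light after hitting the wall
    indirect = tuple(bounce_fraction * c for c in reflect(off_wall, floor_albedo))
    return tuple(d + i for d, i in zip(direct, indirect))


# Illustrative values only (assumptions, not Cornell's measured data).
WHITE_LIGHT = (1.0, 1.0, 1.0)
RED_WALL = (0.75, 0.10, 0.10)
WHITE_FLOOR = (0.80, 0.80, 0.80)

tinted = floor_color(WHITE_LIGHT, RED_WALL, WHITE_FLOOR, bounce_fraction=0.5)
plain = floor_color(WHITE_LIGHT, RED_WALL, WHITE_FLOOR, bounce_fraction=0.0)
print("near the red wall:", tinted)
print("no bounced light: ", plain)
```

With no bounced light the floor stays a neutral gray, while any positive `bounce_fraction` raises the red channel above green and blue.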

Cornell University is known for its research on physically based lighting, and built a complete light measurement laboratory for research into the control of light. The box is still used today in research and for demonstration purposes in online tutorials, more often without actual camera data from a physical model but simply for its visual properties.

In the 1990s the graphics department at Stanford University published the Stanford 3D Scanning Repository, a series of test models created from scans of real-world objects using a Cyberware 3030 MS Color scanner. At the time many new surface reconstruction algorithms were being developed, and the purpose was to make detailed reconstructions and range data available to the general public and to other researchers who did not have access to the expensive high-end scanning facilities required to create these models.

Most of the objects in this collection are of religious or cultural importance and should only be used in good taste. One example is the Stanford Dragon, which is significant in China, where it’s taboo to disfigure a dragon, the historical symbol of the emperor among many other things. The original file contains 871,414 triangular faces, but various reduced polygonal meshes can also be found around the internet.

One of the few models that can be disfigured in nasty simulations and morphs without implications, and perhaps the most famous of them all, is the Stanford Bunny. It was made by Greg Turk and Marc Levoy in 1994 using a technique they’d developed for creating polygonal models from range scans.

A range scan is a grid of distance values that tell how far the points on a physical object are from the device that creates the scan. It can be visualized as a 2D image in which each pixel contains a distance value instead of a color. In a black and white image, shorter distances would appear as bright pixels and points farther away would appear darker. Several such scans usually have to be combined to capture the full geometry of an object. Texture data can be captured as well, using color scans.
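The visualization described above can be sketched in a few lines. This is a minimal illustration, not the format used by any particular scanner: the function name `range_to_grayscale` and the use of `None` for points where the scanner got no reading are assumptions made for the example.

```python
def range_to_grayscale(depths):
    """Map a grid of distances to 0-255 brightness; nearer points are brighter.

    Cells holding None (no reading) are drawn black. Note that the farthest
    valid point also maps to 0 in this simple scheme.
    """
    valid = [d for row in depths for d in row if d is not None]
    if not valid:
        return [[0 for _ in row] for row in depths]

    near, far = min(valid), max(valid)
    span = far - near

    image = []
    for row in depths:
        out = []
        for d in row:
            if d is None:
                out.append(0)      # no data for this pixel
            elif span == 0:
                out.append(255)    # every valid point is equally near
            else:
                out.append(round(255 * (far - d) / span))
        image.append(out)
    return image


# A tiny 2x2 "scan": distances in arbitrary units, one missing reading.
image = range_to_grayscale([[1.0, 2.0], [3.0, None]])
print(image)
```

The nearest point ends up at full brightness and the farthest at black, with everything else scaled linearly in between.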

The Cyberware scanner used at Stanford moves an object through a sheet of bright red laser light to create the range images. The laser sheet is created by sending the laser beam through a cylindrical lens. As the object passes through the sheet, the line of light wiggles across its surface, and this is captured by a video camera.

For an object to be visible to the camera it must reflect red laser light. A black object would absorb all the light and therefore not be visible, and bright blue or green objects wouldn’t scan well under these conditions either, as they reflect little red light. Very specular objects can also cause false depth readings, because parts of them can be illuminated indirectly through reflection.

The object had to be diffuse and reflect red light well. It was close to the Easter holidays, and Greg Turk was out shopping when he found a collection of identical terracotta bunny rabbits in a home and garden shop. Being made of terracotta, the bunny was the perfect object, so he brought one back to the Stanford Graphics Laboratory for scanning.

A collection of ten range scans was then merged to create the polygonal mesh, which consists of 69,451 triangles, and it became the Stanford Bunny. The physical model still resides at Stanford today. The mesh is fairly low-poly by today’s standards and has five holes in it. Two are original holes at the base, because the real model is hollow. The other three, one on the chin and two on the base, are the result of missing scan data at these locations. Holes of this kind are common in 3D scans.
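Holes like these can be found in a triangle mesh by looking for edges that belong to only one triangle. The sketch below is a generic illustration, not the method used at Stanford: the function names are invented for the example, triangles are assumed to be tuples of vertex indices, and holes are counted as connected groups of boundary edges, which assumes that no two holes share a vertex.

```python
from collections import defaultdict


def boundary_edges(triangles):
    """Return the edges that are used by exactly one triangle."""
    counts = defaultdict(int)
    for a, b, c in triangles:
        for u, v in ((a, b), (b, c), (c, a)):
            counts[(min(u, v), max(u, v))] += 1  # ignore edge direction
    return [edge for edge, n in counts.items() if n == 1]


def count_holes(triangles):
    """Count holes as connected groups of boundary edges."""
    neighbors = defaultdict(set)
    for u, v in boundary_edges(triangles):
        neighbors[u].add(v)
        neighbors[v].add(u)

    seen = set()
    holes = 0
    for start in neighbors:
        if start in seen:
            continue
        holes += 1
        stack = [start]
        while stack:  # walk around this boundary loop
            node = stack.pop()
            if node in seen:
                continue
            seen.add(node)
            stack.extend(neighbors[node] - seen)
    return holes


# A closed tetrahedron, then the same shape with one face missing.
closed = [(0, 1, 2), (0, 1, 3), (1, 2, 3), (0, 2, 3)]
opened = closed[:-1]
print(count_holes(closed), count_holes(opened))
```

In a closed mesh every edge is shared by two triangles, so removing a face leaves a ring of single-use edges around the gap.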

The Stanford Bunny has been used in a wide range of research tests for things such as polygonal simplification, surface smoothing, texture mapping and non-photorealistic lighting.

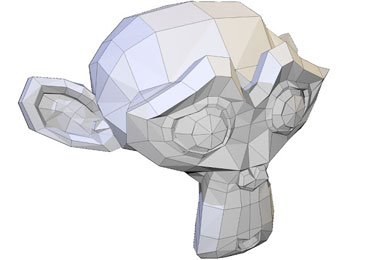

On a final note, and in contrast to the teapot primitive in 3DS Max, the open source software Blender has something much more original: a 3D model of a chimpanzee. Blender was originally developed as an in-house application for the Dutch animation studio Neo Geo and Not a Number Technologies. The developers added the chimpanzee in 2002 as an Easter egg and a last personal tag when it was clear the studio was shutting down for good. They named her Suzanne, after an orangutan in the Kevin Smith film “Jay and Silent Bob Strike Back”, and she’s been a part of Blender ever since.